Our 2019 Customer Relationship Quality Results

A big thank you to all of our clients and channel partners who completed our CRQ assessment this year! It gave us a wealth of feedback. We are humbled that you have given us such positive scores, and we are thrilled with both the overall results and the detailed responses we received.

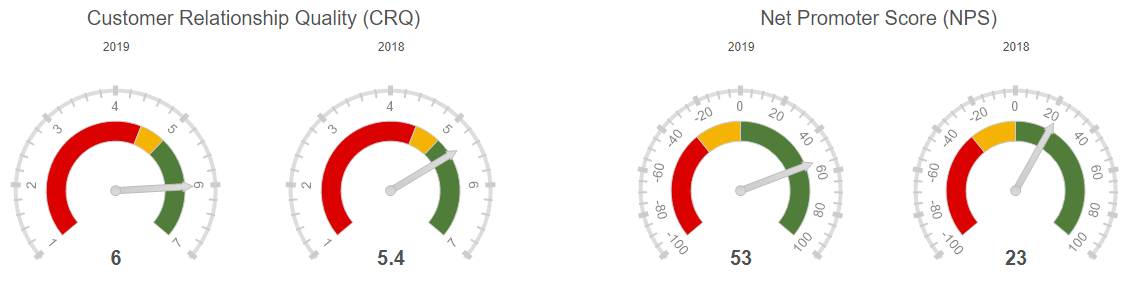

In summary: we had an overall completion rate of 49%, a CRQ score of 6.0 and a Net Promoter Score of +53%. This is the strongest set of scores we have ever received.

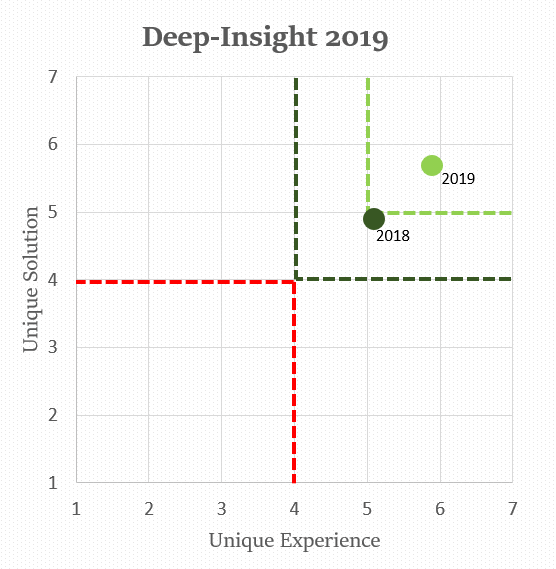

Regaining our Unique Status

Our scores this year mean that we are now back in ‘Unique’ territory, which we just missed out on last year. Uniqueness requires a combination of a winning ‘Solution’ and a great ‘Experience’. Last year our ‘Solution’ scores had slipped, and over the past 12 months we have been working hard to regain this ‘Unique’ status. It’s very gratifying to see all of our hard work paying off.

Deep-Dive: New and Improved

We have reflected a bit on last year’s journey for Deep-Insight and on why we regained our Unique status. We took your feedback from last year on board and put together a plan to upgrade our Deep-Dive platform. As a result, we recently rolled out Deep-Dive v1.1, which is faster, has more features and allows our clients to access individual account reports at the click of a button. The development team’s work on Deep-Dive has paid off: our ‘Solution’ scores increased from 4.9 to 5.7 this year, and we have already received some very positive feedback on the upgraded platform.

Our Plans for 2019?

Even though we received an amazing set of scores this year and we are thrilled with the results, that does not mean we will take a break. We have put our heads together and come up with the following action points for the coming months.

No. 1 – Share Results with All Clients – and Create Joint Action Plans

We tell our clients to share their results with their customers, as this is a very effective way of building strong relationships. In the past we have sometimes been guilty of not taking our own advice, but this year we plan to do exactly that with all of our clients. Expect us to reach out to you in the very near future so that we can review your feedback together and see how Deep-Insight can be more effective this year at helping you achieve your 2019 objectives.

No. 2 – More Support with Account Management

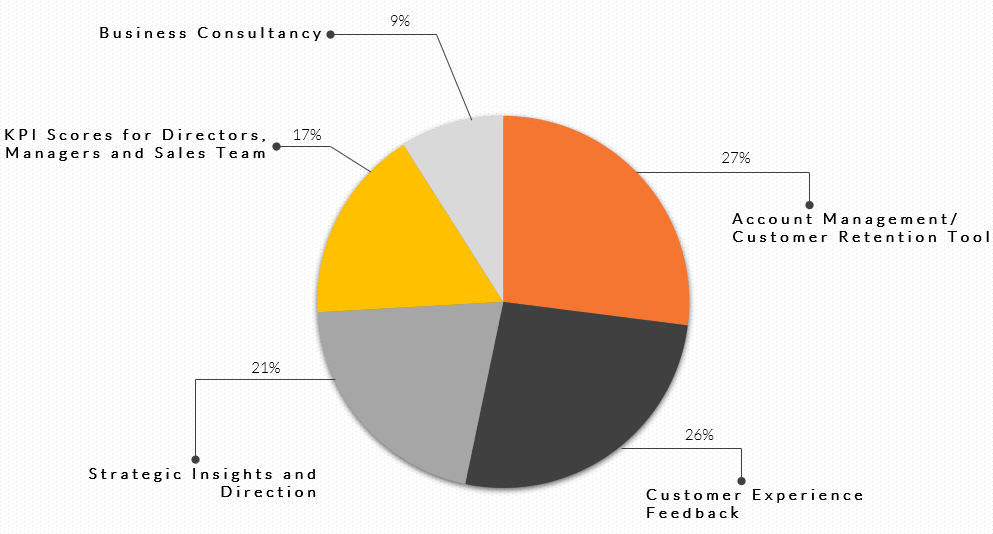

This year we asked you what you use our services for. The answer? You use Deep-Insight primarily as an Account Management/Customer Retention tool, followed closely by Customer Experience feedback.

The message for us at Deep-Insight is that we need to spend more time with our clients at the start of any assessment to understand how you segment your client base, how you allocate account managers and service teams to those accounts, and how we can help you get more account-based insights from using the Deep-Dive platform. We also need to be more supportive in helping you use the results to manage those accounts more effectively. This is one area we would specifically like to explore with each of you in the coming weeks.

No. 3 – Aim for a Higher Response Rate in 2020

This is more of an internal action for us at Deep-Insight. A 49% response rate is not bad, but we know that some of you set targets of 60% or higher and achieve them. We will be aiming for a 60% completion rate in 2020. We always advise our clients to work with their account teams to achieve the highest response rate possible, so next year we will put a stronger focus on this for ourselves as well.

Thank you again for your time and input into this year’s customer assessment. We will be in touch shortly.

John O’Connor

The next question we get asked is “Is it really that high?”