The B2B Blindspot: Why NPS Isn’t Enough

Why do so many B2B CX programmes fail?

Later this year, Bert Paesbrugghe will be hosting a LinkedIn webinar called The B2B Customer Success Blindspot: Why NPS Isn’t Enough. It sounds like it will be a good session and I have cheekily borrowed his title for this blog as it got me thinking about some of the reasons why B2B companies set up customer experience (CX) or Net Promoter Score (NPS) programmes in the first place.

More importantly, it’s worth reflecting on why these CX and NPS endeavours often fail to deliver on their initial promise. And that’s the sad truth – many of these programmes fail to improve the service delivered to customers. They fail for a variety of reasons. One of these is the belief that Net Promoter Score is a silver bullet for solving all manner of customer woes.

It’s not. That’s the blindspot. NPS is not enough for B2B companies.

B2B is different

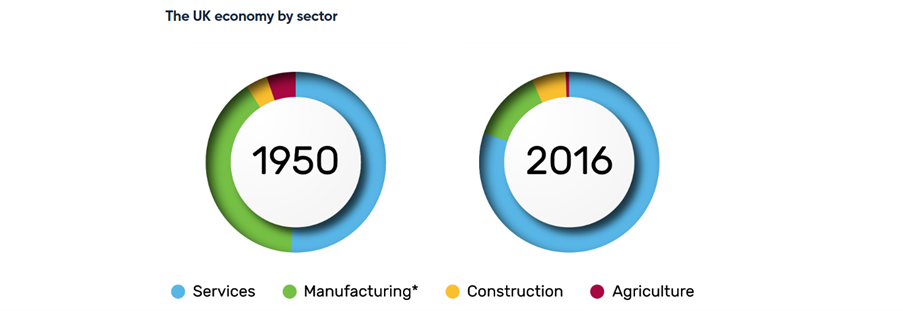

The first thing to mention is that the Business-to-Business (B2B) world is VERY different to its Business-to-Consumer (B2C) counterpart.

The consumer world is all about the 4Ps: Product, Price, Place and Promotion. Marketing guru Philip Kotler popularised the 4Ps back in the 1960s. They were a core part of his Marketing Management book that many of us still have on our shelves today.

My only real problem with the 4Ps model is that it’s essentially a B2C concept. It doesn’t cover the subtleties of the B2B world where very often a service provider is delivering a very complex service across multiple locations – often in different countries. This is a world away from selling and marketing consumer products such as Mars Bars or Mercedes cars.

The 4Ps also don’t take into account the need for key/global account management or the associated challenges of building and maintaining relationships with multiple decision-makers and influencers across large global organisations.

NPS is one-dimensional

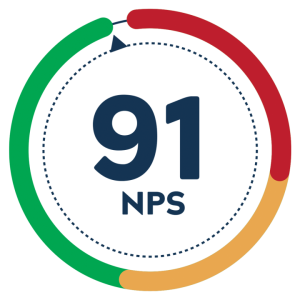

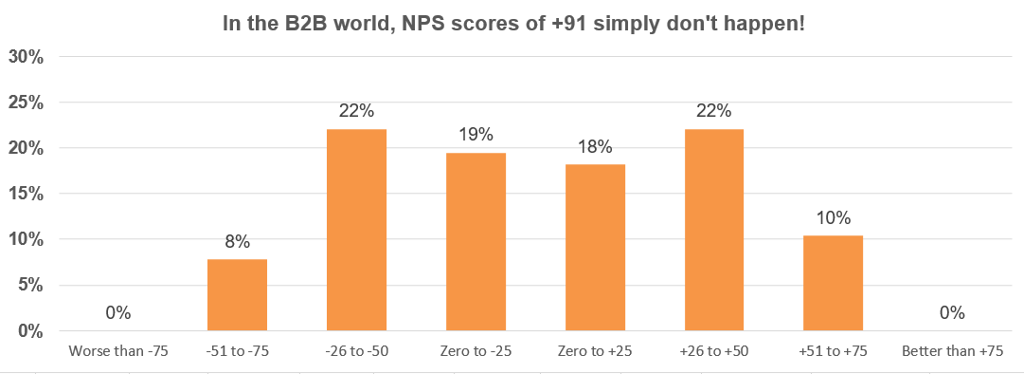

Net Promoter Score has proven to be one of the most durable metrics in management, ever since its invention by academic and business consultant Fred Reichheld more than two decades ago. Reichheld’s basic premise was that you only need to ask one question in order to understand if a customer is going to stay loyal to you or not. The question is: “How likely are you to recommend us to a friend or colleague?”

Fred, an excellent marketeer, promoted the benefits of his Net Promoter Score (NPS) concept in publications like the Harvard Business Review. He then proclaimed its merits in his 2006 book The Ultimate Question. Since then, NPS has become a hugely popular metric for customer loyalty and customer experience.

I’ve written about NPS before and, in general, I’m a fan of the metric for both its simplicity and its popularity. Sure, it’s not perfect, as Professor Nick Lee points out. But then again, is there a perfect KPI for anything? Let’s agree that Net Promoter Score has its place and is worth measuring even if it is a little one-dimensional.

So NPS is good, but much more is required, particularly in the B2B world with all of its complexities, peculiarities and challenges.

Why NPS is not enough (in B2B)

Let’s go back to basics here. B2B IS different. So let’s recap on what some of those differences are:

- Customer Base. Consumer brands like Mars Bars and Mercedes cars are sold to millions of individuals. Three million sold every single day, in the case of Mars Bars. In contrast, we work with B2B clients that generate annual revenues of more than €1bn from fewer than 100 clients.

- Value. A Mars Bar costs around €1.60 at the time of writing (let me know if you can source them cheaper!) while an outsourced IT contract can be worth €100m. Admittedly, a Mars Bar can be consumed in less than five minutes while a €100m contract might take five years to consume. But you get the picture: value and Value For Money are very different in the B2B and B2C worlds.

- Marketing Strategy. We talked earlier about Kotler’s 4Ps. While the consumer world is all about Product, the B2B world is more about Service and Relationships. Even in today’s AI-enabled world, those services are still delivered by people. Relationship-building is a critical component of the marketing mix in the B2B world.

- Sales Focus. In the consumer world, merchandising and point-of-sale advertising are key. In the B2B world, far more emphasis is placed on educating the customer about features, benefits, return on investment, and so on. This is still mainly done through personal contact and relationships.

- What to Maximise? The consumer world is about the transaction – promoting those Mars Bars at the point of sale, for example. Customer lifetime value (CLV) is rarely if ever mentioned in the consumer world. CLV is arguably the most important thing to maximise in the B2B world as it typically takes 2-5 years to recover the initial sales cost of a major multi-year contract win.

- Buying Process. In a supermarket, buying a Mars Bar is a split-second decision. Even for a Mercedes, the decision can be quick. Clinching that 5-year outsourcing deal can and does take years from beginning to end. It also involves multiple decision-makers and influencers.

- Buying Decision. In the consumer world, decisions are often made on emotion – hence the importance of brand and image. In the B2B world, we like to think decisions are made on rational grounds, based on clearly defined evaluation criteria.

What else is needed?

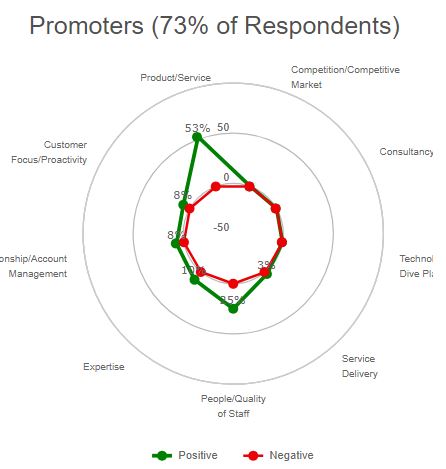

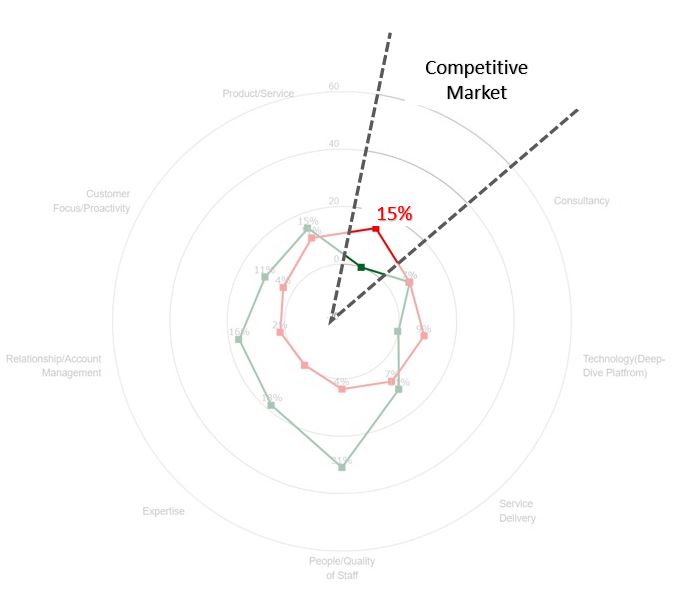

Let’s assume we have just sold a 5-year outsourcing deal to a client and we are now in the onboarding or delivery stage of that contract. Yes, it’s useful to know if our client would recommend us to a friend or colleague. That’s the Net Promoter question, but is it enough?

Not really. Ideally, we need to know much more. For example, do our clients trust us now that we have started working for them? Are they committed to us for the long term? Are they happy with the service that they are now receiving?

These are just some of the questions that we need to ask our B2B clients in a systematic way. We need answers at an aggregate level but we also need feedback at an account level. Contract A may be going swimmingly. Contract B may already be on the rocks (to continue the theme) but we might not know that if we are only getting aggregated client feedback.
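To make the aggregate-versus-account point concrete, here is a minimal sketch in Python. It assumes the commonly used NPS scoring bands (promoters score 9-10, detractors 0-6, and NPS is the percentage of promoters minus the percentage of detractors), which this post doesn’t spell out. The account names and scores are made up purely for illustration, and the helper names `nps` and `nps_by_account` are my own.

```python
from collections import defaultdict


def nps(scores):
    """NPS under the usual convention: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one score")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return round(100 * (promoters - detractors) / len(scores))


def nps_by_account(responses):
    """Group (account, score) pairs by account and score each account separately."""
    by_account = defaultdict(list)
    for account, score in responses:
        by_account[account].append(score)
    return {account: nps(scores) for account, scores in by_account.items()}


# Hypothetical survey responses: (account, 0-10 answer to the recommend question).
responses = [
    ("Contract A", 10), ("Contract A", 9), ("Contract A", 9),
    ("Contract A", 8), ("Contract A", 10), ("Contract A", 9),
    ("Contract B", 3), ("Contract B", 6), ("Contract B", 7), ("Contract B", 5),
]

overall = nps([score for _, score in responses])
per_account = nps_by_account(responses)

print(f"Overall NPS: {overall:+d}")
for account, score in per_account.items():
    print(f"{account}: {score:+d}")
```

With these made-up numbers the overall score is a respectable +20, yet Contract B sits at -75. If you only looked at the aggregate figure, you would have no idea that one of your two contracts was in serious trouble.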

Eliminating the blindspot: Customer Relationship Quality (CRQ)

An alternative to asking the one-dimensional NPS question is to view the customer relationship more holistically. That’s where Customer Relationship Quality (CRQ) fits in.

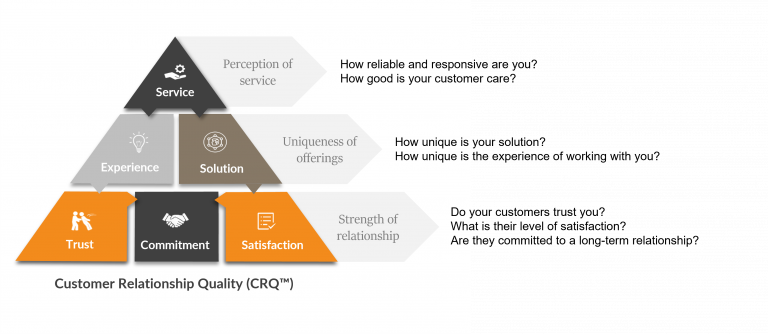

CRQ can be visualised as a pyramid made up of three levels.

- The first and most fundamental is the Relationship level. Do your clients trust you, are they committed to a long-term relationship with you, and are they satisfied with that relationship?

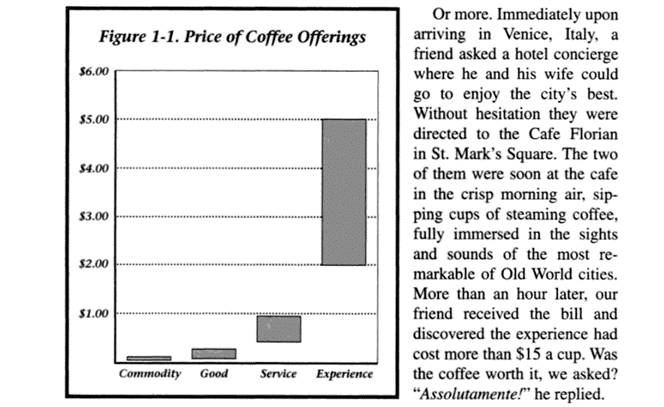

- The second is the Uniqueness level. Do your clients view the experience of working with you, and the solutions you offer, as truly differentiated and unique? Do they see you as good value for money?

- At the top of the pyramid is the Service level. Are you seen as reliable, responsive and caring? Get this wrong and you will never be seen as Unique and you will struggle to build a long-term relationship with that client.

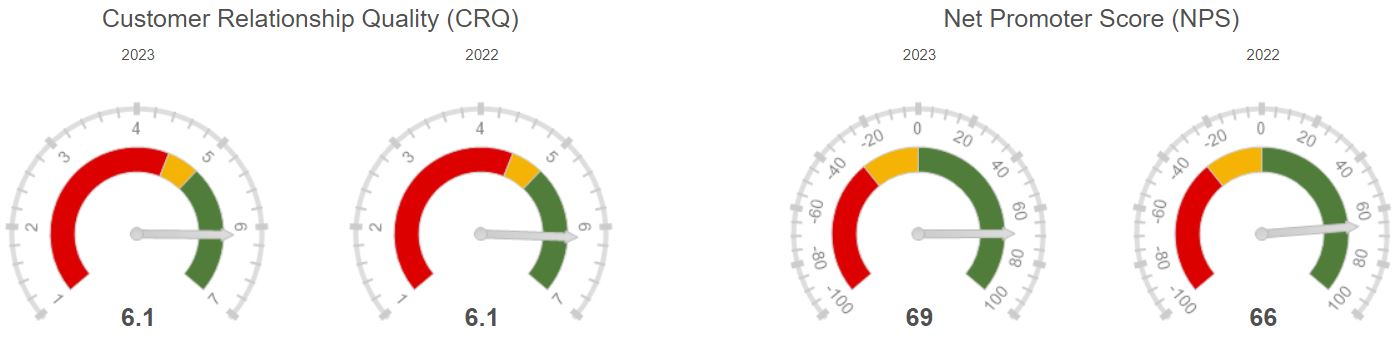

Interestingly, CRQ and NPS scores are highly correlated. If you score well on all six elements of this Customer Relationship Quality (CRQ) model, your clients will act as Ambassadors, generating a high NPS result for you. However, CRQ gives you so much more information to act upon, and that’s far more important.

The most important part: Action

The CRQ model above was specifically designed for the B2B world. That said, it really doesn’t matter what questions you ask your clients if you fail to do anything with their feedback.

The most important part of any NPS, CRQ, CX or client listening programme is the ‘Action’ piece. The reason that many customer programmes fail to deliver is that they are run by the Marketing department (sorry guys and gals!) while the members of the company’s Senior Management Team have collectively washed their hands of any responsibility for acting on that client feedback.

In most B2B organisations, key client relationships are owned by Sales. In some cases where delivery is an ongoing function, it’s the Service or Operations functions that have most of the day-to-day client contact. It’s rarely, if ever, somebody from Marketing. The Sales Director (or Service/Operations Director) needs to own the ‘Close The Loop’ element of the programme. It’s unfair to expect Marketing to take responsibility for it.

Put another way: it’s madness to think that Marketing can effect change on its own. That’s a Leadership function. I’ve never seen a successful NPS or CX programme that has not been driven from the top. So regardless of what you think of NPS as a B2B metric, don’t assume that NPS or any other set of survey questions is going to improve your top line or your profitability. It won’t, unless there’s follow-up action. That action needs to be managed systematically, and it needs to be driven by the SMT or Executive Team.

Finally, do remember that it’s not about the score. It’s about using that valuable client feedback to take action and become more customer-centric. That’s how you generate more revenues and boost profits.