What Does Net Promoter Score Actually Measure?

Interview with Professor Nick Lee

Some weeks ago, I met Nick Lee, Professor of Marketing at Warwick Business School, to discuss his views about Net Promoter Score (NPS). I specifically wanted to get Nick’s views on NPS as a measurement tool. Does it work? Is it linked to sales growth? What does Net Promoter Score even measure?

Nick has an impressive background. In fact, The Academy of Marketing has already honored him with Life Membership in recognition of his outstanding lifetime research achievements and contribution to marketing scholarship. He is currently the Editor-in-Chief of the Journal of Personal Selling and Sales Management (JPSSM), which is the premier journal for research in professional selling. He is the first UK academic to hold this position, and only the second ever from outside the US. Nick was also the Editor-in-Chief of the European Journal of Marketing from 2008 to 2018.

Nick is more than just an academic. He holds strategic advisor positions for a number of innovative sales and leadership development companies, and he was part of the All Party Parliamentary Group inquiry into professional sales in 2019. His work has been featured in The Times, the Financial Times and Forbes, and he has appeared on BBC Radio 4, BBC Radio 5Live, and BBC Breakfast television.

Net Promoter Score: 20th Birthday

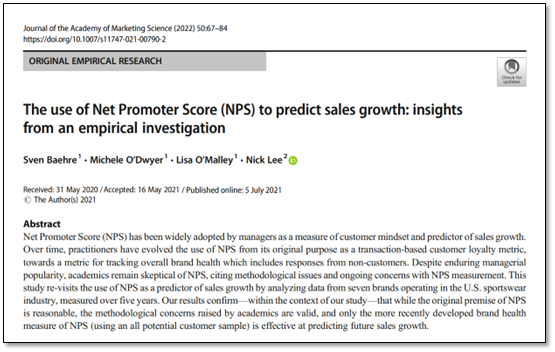

What prompted me to interview Nick Lee was a recent academic paper he was involved in called “The use of Net Promoter Score (NPS) to predict sales growth”, which was published in the Journal of the Academy of Marketing Science (JAMS) in 2022.

Our discussion was very timely as Net Promoter Score is a metric that was invented by Fred Reichheld, a partner at Bain & Company, 20 years ago this year.

Over the past two decades, NPS has divided opinions. While it has been embraced enthusiastically by many businesses, it has been shunned by others. The academic world has questioned what Net Promoter Score actually measures.

I think you’ll find Nick’s comments on Net Promoter Score and what it really measures quite fascinating. He doesn’t hold back in his criticism of NPS, but he also points out that its flaws don’t invalidate its usefulness as a measurement system, as long as it’s used in the right way: tracking changes over time rather than simply chasing a number.

The Interview

John:

Good morning Nick. To start, could I ask you to tell me a little about your own academic background. What was your first interest in the field of marketing?

Nick:

Well, I began my academic career as a doctoral student in marketing strategy. It seemed to me that the connection between sales and psychology was quite important. And there was a lot of work in management that was related to psychology, but very little of that research had been focused on the sales force.

A lot of sales research is actually about things like incentive structures and territory design. I call that ‘technical management’, but what I was more interested in was not so much the decisions that managers made, but how they implemented those decisions. Sales management is more about psychology than a mathematical or technical thing. More recently, we’ve seen how digital transformation has led to a merging of the ‘technical’ things with the more psychological things, and that’s really the space I operate in now.

Is NPS a Fundamentally Flawed Metric?

John:

So let’s talk about the psychology of Net Promoter Score. It’s clearly a sales and marketing concept. It’s also a performance metric. When did you start getting involved with Net Promoter Score and is it a good sales and marketing measurement tool?

Nick:

My interest in NPS really came from Sven Bähre and that paper we wrote called “The use of Net Promoter Score (NPS) to predict sales growth”. Sven drove that project while my role was to use that project to address something that was important to the marketing literature. And I think it is very interesting that academia’s gone down one road with Net Promoter Score, the very simple road which says “NPS is useless and a load of rubbish”.

At the same time, business practice has completely ignored that academic view. Net Promoter Score has become the dominant customer metric in business. It feels like someone has to be right and someone has to be wrong here. But the interesting thing is it turns out that both sides are right. They’re just talking about different things. And that’s what fascinated me.

John:

So tell me about those different things. When I looked at Net Promoter Score many years ago, a guy called Tim Keiningham – one of the people you refer to in your paper – was very critical about NPS as a metric. At the time he worked at Ipsos so I wondered if he was bringing his own biases to the table. But at the same time, he was saying that the data did not show any link between NPS and sales growth.

Nick:

Oh, that’s interesting about Keiningham, I didn’t know that he was at Ipsos then. So there are a couple of issues that lead to this disconnect. One is that we generally don’t like it when a publication like the Harvard Business Review tells us there’s a single number that every company needs to look at. That automatically gets people’s interest and it actually didn’t make sense to me.

The other issue, and I have every sympathy with this view, is that Net Promoter Score doesn’t really measure what it claims it measures. There are so many potential flaws in the idea that this one number could be a valid measurement of anything real. I spend a lot of time trying to develop measures around attitudes and psychological concepts. And this is a classic example of a metric that doesn’t seem to actually ‘measure’ anything. So on that basis, it is quite flawed.

What does NPS Actually Measure?

John:

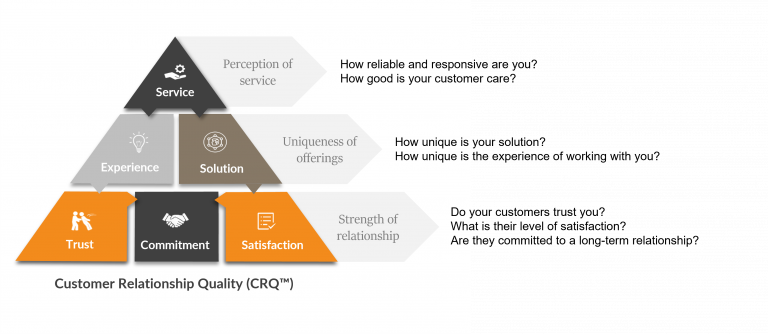

Surely Net Promoter Score is a measure of advocacy, if nothing else?

Nick:

To some extent it taps into advocacy, sure. However, it’s a number in response to a single question that doesn’t take account of all kinds of other factors that might be relevant. And then you have this weird calculation for subtracting ‘Detractors’ from ‘Promoters’. As a mathematical construct, that’s not great. But the real issue is that advocacy is a much more complicated idea and can’t really be accurately captured by a response to a single question.

So there’s no real evidence that that answer to the NPS question is a reliable measure of advocacy. And then when you add in the idea that you have to subtract the bottom scores (Detractors) from the top scores (Promoters), you’re torturing the measure to within an inch of its life. At that point, it ceases to become a measure anymore, even if it was at the beginning. It becomes a number which is divorced from the underlying concept.
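For readers who want to see the calculation Nick is describing, here is a minimal sketch. It assumes the standard NPS convention, which is not spelled out in the interview: customers answer on a 0 to 10 scale, scores of 9 or 10 count as Promoters, 0 to 6 as Detractors, and 7 or 8 as Passives. The function name and the sample ratings are hypothetical.

```python
def net_promoter_score(ratings):
    """Return NPS (from -100 to 100) for a list of 0-10 recommendation ratings.

    Assumes the standard cut-offs: 9-10 = Promoter, 0-6 = Detractor,
    7-8 = Passive. These thresholds are an assumption, not from the interview.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # Passives count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(ratings)


# Two hypothetical customer bases with very different response patterns.
mixed = [10, 9, 9, 8, 7, 7, 6, 5, 3, 10]
polarised = [10, 10, 10, 10, 10, 0, 0, 0, 0, 8]

print(net_promoter_score(mixed))      # 10.0
print(net_promoter_score(polarised))  # 10.0
```

Both sets of answers give the same score, even though the second group is far more polarised. That is one way to see how the subtraction step can separate the number from the attitudes underneath it.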

John:

I’m with you. But does that invalidate it completely as a measure?

Nick:

Well, here we get to the bigger question: rather than “is NPS a measure”, we need to ask “is NPS actually useful?” In academia, we’ve said nothing about NPS for the last 20 years apart from “it’s crap”. But when I see a whole bunch of senior executives in large companies saying “well, I’m finding a use in it”, then academia needs to look at that.

What’s my conclusion? I think that is a problem for academia insofar as we tend to talk past each other in a lot of areas. We have to provide some insight into what practitioners are doing in this field. Of course, it’s not our sole driving force as a discipline to find out what practice is doing and study that. But at the same time, it is worth studying if the entire business community is using something that 20 years ago we in academia said was wrong.

John:

Have you come to any conclusions as to why senior leaders use Net Promoter Score, or how they can use it more effectively?

How Should NPS be Used?

Nick:

One reason why it’s used so much is partly a self-fulfilling prophecy. It’s used because everyone uses it and therefore nobody wants to not have that information. That’s an important factor.

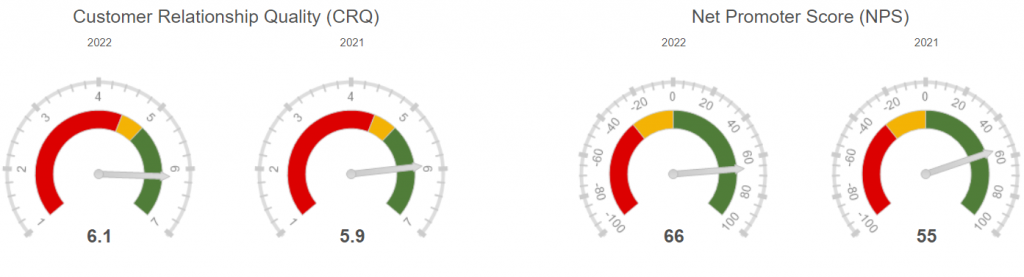

But then the other aspect is that it’s used because it’s simple. It’s easy to collect and it’s simple to use. Whether it’s easy to interpret is actually a more challenging question. I don’t think it is that easy to interpret. For starters, what does the NPS number actually mean at any given point in time?

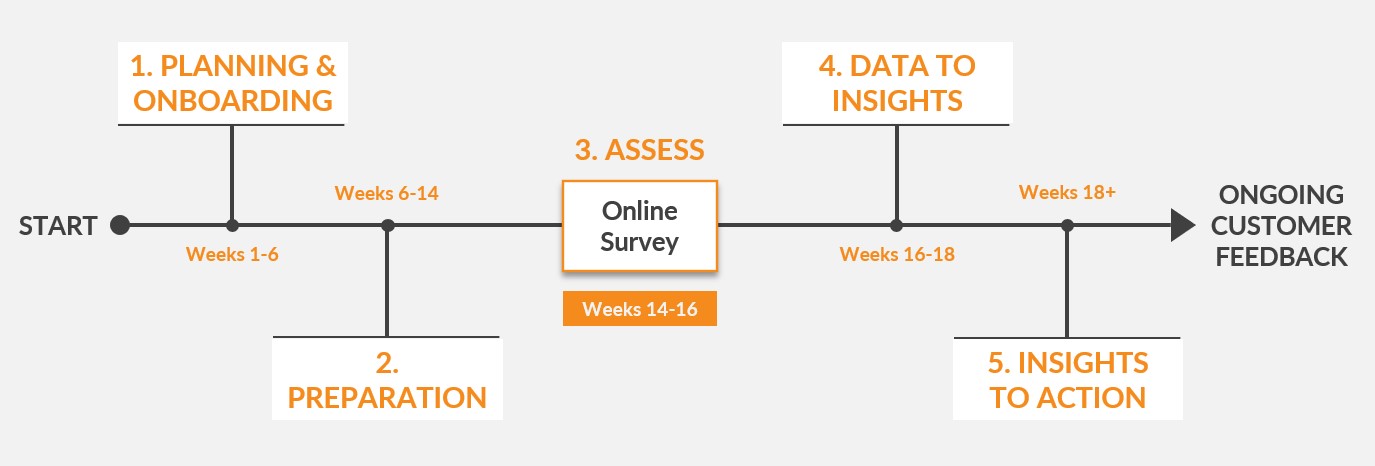

Now when you start tracking NPS over time, those questions fall away because what you’re looking at is trend data. What was it last year? Is it up or is it down? You have to operationalise NPS in the right way – by tracking the change in NPS score from one period to the next, not the absolute score. That’s important.

Net Promoter Score is also influenced by a lot of transient factors. For example, it’s very easy to manipulate and there’s a big selection bias. Who is asked to complete the NPS survey? Also, every surveyor cannot help but lead the customer on towards a higher NPS score. So at any given point in time, the Net Promoter Score doesn’t mean much because of that selection bias. But if you assume that those forces are broadly the same over time, you can extract that little bit of signal from that noise with the time series a little bit more effectively than at a single point in time.
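The idea of looking at the change from one period to the next, rather than the absolute score, can be sketched in a few lines. This is only an illustration: the quarterly figures and the function name are hypothetical, and it assumes each period’s NPS was collected in a broadly consistent way.

```python
def nps_changes(scores_by_period):
    """Return (period, change) pairs comparing each period with the one before.

    `scores_by_period` maps period labels to NPS values, in chronological order.
    """
    periods = list(scores_by_period)
    return [
        (curr, scores_by_period[curr] - scores_by_period[prev])
        for prev, curr in zip(periods, periods[1:])
    ]


# Hypothetical quarterly NPS values, for illustration only.
quarterly_nps = {"Q1": 32.0, "Q2": 35.0, "Q3": 31.0, "Q4": 36.0}

for period, change in nps_changes(quarterly_nps):
    print(f"{period}: {change:+.1f}")
# Q2: +3.0
# Q3: -4.0
# Q4: +5.0
```

The absolute values (32, 35 and so on) carry whatever bias the survey process introduces. The differences are easier to read as long as that bias stays roughly constant from one period to the next.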

John:

I’m with you. But in our experience, the level of bias can increase over time. So you need to have a governance structure to ensure consistency. Or indeed, you may need to break the system apart and start again if the ‘gaming’ gets too deeply ingrained.

Nick:

Yeah, I think you’ve got it right there. It is important not to be naïve that over time there might be an ‘instrument effect’ or a ‘history effect’ where people learn how to better game the system. I think with something like Net Promoter Score, that’s less of a thing because it’s pretty easy to know how to game the system straight away. And the only thing you can do really is try to say to your customers: “this is really important to me, can you please leave me a good score?” And there’s only so convincing you can be there. It’s not like you’re going to get better at doing that after a certain point in time. So I would be less worried about that.

But of course there are always ways to game the system. But the point is we should track trends and not individual time points. And the more data we have the better. Of course more bad data isn’t better than less good data. But more flawed data is probably better than less flawed data. So given we assume the data is flawed all the time, the most important thing is to know how that data is flawed. And while you can never perfectly extract the signal from the noise, the signal is there if you have enough data points gathered over a sufficiently long period of time.

Where Do We Go From Here?

John:

So where do we go from here, and where should academics be focusing their efforts?

Nick:

A few things for us to work on. First is international comparability. Big multinationals use Net Promoter Score across their different national areas. And I would imagine they’re comparing EMEA with America with Australasia. Is NPS really able to support that comparison? That’s a challenge so that’s the first thing I would look at.

Second is to move away from a single question. We really need multiple measures in order to compare them statistically across different cultures. So Net Promoter Score is one item. You would like it to be three or four items. And then you could compare those items across countries.

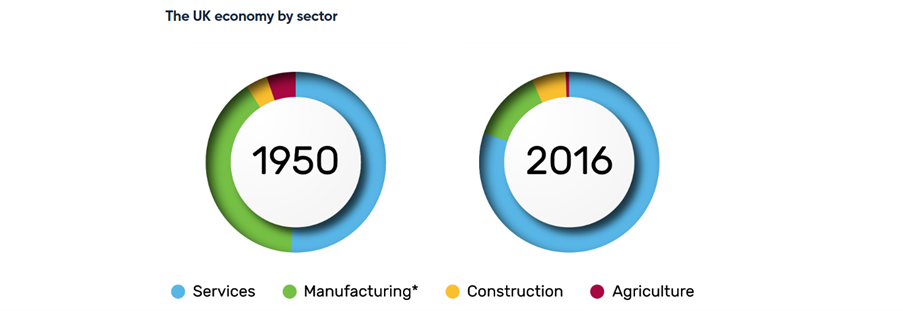

A third area is to get a wider industry perspective. We looked at NPS in a branded consumer goods context: sportswear. Is it equally useful across all kinds of different industry sectors? Particularly if you look at the service sector and front-line services, which are linked to business-to-business (B2B) personal selling. Is NPS a useful metric for these interpersonal interactions? How well does it work in a B2B setting?

John:

Nick, I really appreciate your time today. I’m looking forward to seeing more research into Net Promoter Score. From a selfish perspective, I’d particularly like to see some B2B research done as there’s very little out there that I can find on the topic. Thanks again, Nick.